Getting Started

Making an environment

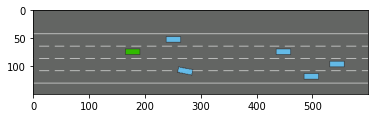

Here is a quick example of how to create an environment:

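```python
import gym
import highway_env  # registers the highway-env environments with gym
from matplotlib import pyplot as plt
# %matplotlib inline  # uncomment when running inside a Jupyter notebook

env = gym.make('highway-v0')
env.reset()
for _ in range(3):
    # Look up the index of the "IDLE" meta-action (keep the current lane and speed)
    action = env.action_type.actions_indexes["IDLE"]
    obs, reward, done, info = env.step(action)
    env.render()

plt.imshow(env.render(mode="rgb_array"))
plt.show()
```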
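The `IDLE` lookup goes through a dictionary that maps meta-action names to the integer indices expected by `env.step()`. The sketch below rebuilds such a dictionary in plain Python. It assumes five meta-actions `LANE_LEFT`, `IDLE`, `LANE_RIGHT`, `FASTER`, `SLOWER` with indices 0 to 4; this mapping is an assumption, so print `env.action_type.actions_indexes` to check the one used by your installed version. `action_sequence` is a hypothetical helper.

```python
# Assumed index -> name table for the discrete meta-actions
# (check env.action_type.actions_indexes for your highway-env version)
ACTIONS_ALL = {
    0: "LANE_LEFT",
    1: "IDLE",
    2: "LANE_RIGHT",
    3: "FASTER",
    4: "SLOWER",
}

# Invert the table to look actions up by name, as in actions_indexes["IDLE"]
actions_indexes = {name: index for index, name in ACTIONS_ALL.items()}


def action_sequence(names):
    """Hypothetical helper: translate meta-action names into env.step() indices."""
    return [actions_indexes[name] for name in names]


print(actions_indexes["IDLE"])                            # 1
print(action_sequence(["FASTER", "LANE_LEFT", "IDLE"]))   # [3, 0, 1]
```

A scripted sequence such as `action_sequence(["FASTER", "IDLE"])` can then be fed to `env.step()` one index at a time; an unknown name raises a `KeyError` instead of silently sending a wrong action.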

All the environments

Here is the list of all the environments available and their descriptions:

Configuring an environment

The observations, actions, dynamics and rewards of an environment are parametrized by a configuration, defined as a `config` dictionary. After environment creation, the configuration can be accessed using the `config` attribute.
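Because the configuration is an ordinary (partly nested) dictionary, a user configuration can be thought of as a set of overrides layered on top of defaults. The sketch below shows one way to do that in plain Python. `DEFAULT_CONFIG` is a small excerpt of the default `highway-v0` values, and `merge_config` is a hypothetical helper, not part of highway-env; the nested `vehicles_count` override is only illustrative.

```python
import copy

# A few keys from the default highway-v0 configuration (excerpt)
DEFAULT_CONFIG = {
    "observation": {"type": "Kinematics"},
    "action": {"type": "DiscreteMetaAction"},
    "lanes_count": 4,
    "vehicles_count": 50,
    "duration": 40,
}


def merge_config(defaults, overrides):
    """Hypothetical helper: return a copy of `defaults` with `overrides` applied,
    recursing into nested dictionaries instead of replacing them wholesale."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Illustrative overrides: fewer lanes, plus one nested observation setting
config = merge_config(DEFAULT_CONFIG, {"lanes_count": 2, "observation": {"vehicles_count": 5}})
print(config["lanes_count"])          # 2
print(config["observation"])          # {'type': 'Kinematics', 'vehicles_count': 5}
print(DEFAULT_CONFIG["lanes_count"])  # 4
```

Merging recursively keeps `observation["type"]` when only one nested key is overridden, whereas a plain `dict.update` would replace the whole nested `observation` dictionary.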

```python
import pprint

import gym
import highway_env

env = gym.make("highway-v0")
pprint.pprint(env.config)
```

```
{'action': {'type': 'DiscreteMetaAction'},
 'centering_position': [0.3, 0.5],
 'collision_reward': -1,
 'duration': 40,
 'initial_spacing': 2,
 'lanes_count': 4,
 'manual_control': False,
 'observation': {'type': 'Kinematics'},
 'offscreen_rendering': False,
 'other_vehicles_type': 'highway_env.vehicle.behavior.IDMVehicle',
 'policy_frequency': 1,
 'render_agent': True,
 'scaling': 5.5,
 'screen_height': 150,
 'screen_width': 600,
 'show_trajectories': False,
 'simulation_frequency': 15,
 'vehicles_count': 50}
```

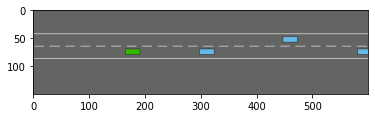

For example, the number of lanes can be changed with:

```python
# The road is rebuilt from the configuration when the environment is reset
env.config["lanes_count"] = 2
env.reset()
plt.imshow(env.render(mode="rgb_array"))
plt.show()
```
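In this example the new lane count becomes visible after `env.reset()`, which is where the scene is rebuilt from the configuration. The toy class below mimics that pattern in plain Python to show why the reset matters. `ToyRoadEnv` is hypothetical and only stands in for the real environment.

```python
class ToyRoadEnv:
    """Hypothetical stand-in: the road layout is built from the config only on reset()."""

    def __init__(self):
        self.config = {"lanes_count": 4}
        self.lanes = []
        self.reset()

    def reset(self):
        # Rebuild the scene from the current configuration
        self.lanes = [f"lane_{i}" for i in range(self.config["lanes_count"])]
        return self.lanes


env = ToyRoadEnv()
env.config["lanes_count"] = 2
print(len(env.lanes))  # 4: the old road is still in place
env.reset()
print(len(env.lanes))  # 2: the new value is used after reset
```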

Training an agent

Reinforcement Learning agents can be trained using libraries such as rl-agents, baselines or stable-baselines.

The parking-v0 environment trained with HER.

```python
import gym
import highway_env
import numpy as np
# Requires stable-baselines 2.x (TensorFlow 1.x)
from stable_baselines import HER, SAC, DDPG, TD3
from stable_baselines.ddpg import NormalActionNoise

env = gym.make("parking-v0")

# Create 4 artificial transitions per real transition
n_sampled_goal = 4

# SAC hyperparams:
model = HER('MlpPolicy', env, SAC, n_sampled_goal=n_sampled_goal,
            goal_selection_strategy='future',
            verbose=1, buffer_size=int(1e6),
            learning_rate=1e-3,
            gamma=0.95, batch_size=256,
            policy_kwargs=dict(layers=[256, 256, 256]))

model.learn(int(2e5))
model.save('her_sac_highway')

# Load saved model
model = HER.load('her_sac_highway', env=env)

obs = env.reset()

# Evaluate the agent
episode_reward = 0.0
for _ in range(100):
    action, _ = model.predict(obs)
    obs, reward, done, info = env.step(action)
    env.render()
    episode_reward += reward
    if done or info.get('is_success', False):
        print("Reward:", episode_reward, "Success?", info.get('is_success', False))
        episode_reward = 0.0
        obs = env.reset()
```
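The `n_sampled_goal=4` and `goal_selection_strategy='future'` arguments control how HER relabels stored transitions. The sketch below illustrates the idea from the HER paper in plain Python, not the stable-baselines code: each real transition is kept, and four extra copies are added whose goal is replaced by a goal actually achieved later in the same episode, with the reward recomputed for that substitute goal. The 1-D positions, the tolerance and the sparse `compute_reward` are assumptions for illustration, not the reward used by `parking-v0`.

```python
import random


def compute_reward(achieved_goal, desired_goal, tolerance=0.5):
    """Assumed sparse reward: 0 if the goal is reached, -1 otherwise."""
    return 0.0 if abs(achieved_goal - desired_goal) <= tolerance else -1.0


def her_future_relabel(episode, n_sampled_goal=4, rng=random):
    """episode: list of (obs, action, achieved_goal_after_step, desired_goal) tuples.
    Returns (obs, action, goal, reward) tuples: each real transition
    followed by n_sampled_goal relabelled copies."""
    transitions = []
    for t, (obs, action, achieved, desired) in enumerate(episode):
        # Keep the real transition with its original goal
        transitions.append((obs, action, desired, compute_reward(achieved, desired)))
        # 'future' strategy: goals achieved after this transition, in the same episode
        future_goals = [step[2] for step in episode[t:]]
        for _ in range(n_sampled_goal):
            new_goal = rng.choice(future_goals)
            transitions.append((obs, action, new_goal, compute_reward(achieved, new_goal)))
    return transitions


# Toy 1-D episode: the agent moves right by 1 each step but the goal (10) is far away
episode = [(float(x), +1, float(x + 1), 10.0) for x in range(3)]
data = her_future_relabel(episode, n_sampled_goal=4, rng=random.Random(0))
print(len(data))                          # 15: 3 real + 3 * 4 relabelled transitions
print(sum(r == 0.0 for *_, r in data))    # number of relabelled "successes"
```

Every real transition in this toy episode fails (reward -1), but the relabelled copies include successes, which is what gives a goal-conditioned agent a learning signal on sparse-reward tasks such as parking.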

Examples on Google Colab

Use these notebooks to train driving policies on highway-env.

A Model-based Reinforcement Learning tutorial on Parking

A tutorial written for RLSS 2019 and demonstrating the principle of model-based reinforcement learning on the parking-v0 task.

Trajectory Planning on Highway

Plan a trajectory on highway-v0 using the OPD [HM08] implementation from rl-agents.

Parking with Hindsight Experience Replay

Train a goal-conditioned parking-v0 policy using the [AWR+17] implementation from stable-baselines.
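The OPD [HM08] planner used in the trajectory-planning notebook builds a look-ahead tree and always expands the leaf with the highest optimistic bound b = u + gamma^d / (1 - gamma), where u is the discounted sum of rewards along the path to the leaf and d its depth, assuming rewards in [0, 1]. The sketch below is a minimal plain-Python version on a hypothetical toy system, not the rl-agents implementation. Recommending the first action towards the leaf with the highest u is one of several possible recommendation rules and is an assumption here.

```python
import heapq


def opd(step, state, actions, gamma=0.8, budget=100):
    """Optimistic planning for deterministic systems (sketch).
    step(state, action) -> (next_state, reward), with reward assumed in [0, 1]."""
    # Each leaf: (-b_value, tie_breaker, u_value, depth, state, first_action)
    counter = 0
    leaves = [(-1.0 / (1 - gamma), counter, 0.0, 0, state, None)]
    for _ in range(budget):
        # Expand the most optimistic leaf (highest b-value)
        _, _, u, depth, s, first = heapq.heappop(leaves)
        for a in actions:
            next_s, r = step(s, a)
            child_u = u + gamma ** depth * r
            child_b = child_u + gamma ** (depth + 1) / (1 - gamma)
            counter += 1
            heapq.heappush(leaves, (-child_b, counter, child_u, depth + 1, next_s,
                                    a if first is None else first))
    # Recommend the first action leading to the leaf with the highest lower bound u
    best = max(leaves, key=lambda leaf: leaf[2])
    return best[5]


TARGET = 3


def line_step(position, action):
    """Hypothetical toy system: move along a line; reward in (0, 1] peaks at TARGET."""
    next_position = position + action
    return next_position, 1.0 / (1.0 + abs(next_position - TARGET))


print(opd(line_step, 0, actions=[-1, +1]))  # 1: move towards the target
```

A heap keeps the leaf selection cheap; the counter breaks ties between equal b-values so that states never need to be compared.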